It's called Core

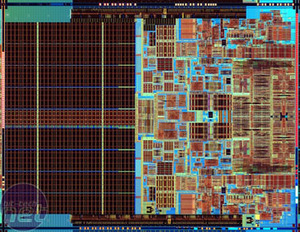

Intel's Core architecture is based on five key elements that collectively help the Core 2 Duo processors reach new performance heights. These are known as Intel Wide Dynamic Execution, Intel Intelligent Power Capability, Intel Advanced Smart Cache, Intel Smart Memory Access and Intel Advanced Digital Media Boost. Each element contributes to the success of Core, and collectively they are worth more than the sum of their parts. We'll spend some time going over each element, detailing how it contributes to the success of Core.

Wide Dynamic Execution:

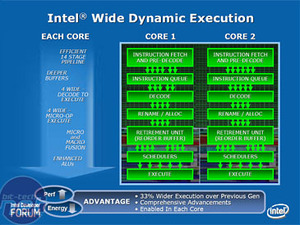

Just like GPUs, CPUs have a number of execution units. The width of the execution engine - how many instructions it can handle side by side - dictates how many instructions-per-clock (IPC) the processor can manage. Both the NetBurst architecture and Pentium M architecture could handle three instructions per clock, per core, while the Core architecture is capable of executing four instructions per clock, per core. This means that a Core-based chip is capable of processing up to 33% more instructions per clock at the same clock speed.
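The 33% figure is simply the ratio of the two widths. As a minimal sketch of that arithmetic (the function name `peak_ipc_gain` is our own, and the result is a theoretical peak rather than a measured speed-up):

```python
def peak_ipc_gain(old_width: int, new_width: int) -> float:
    """Relative gain in peak instructions per clock when a core gets wider."""
    return (new_width - old_width) / old_width


# Three-wide (NetBurst, Pentium M) versus four-wide (Core), per the text above.
gain = peak_ipc_gain(3, 4)
print(f"Peak IPC gain: {gain:.0%}")
```

Real code rarely keeps every slot busy on every clock, so the gain seen in practice depends on the workload.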

The pipeline width is not the only thing changed in Core's execution engine - Intel has also redesigned the length of the pipeline. While width determines how many instructions can be executed at the same time, length determines how many stages each instruction has to pass through on its way to completion.

There are no hard rules on how long or short a pipeline should be, because it ultimately depends on the type of work you're trying to do - a long pipeline splits the work into smaller stages, which generally allows for higher clock speeds, while a short pipeline loses less time when it has to be flushed and refilled, for example after a mispredicted branch. In order to get a good all-round performer, you need to make trade-offs, finding where the sweet spot is in terms of pipeline length. Intel has opted to use a 14-stage pipeline in the Core architecture - it's a compromise between a very long pipeline and a very short pipeline, resulting in a more consistent all-round performer.
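One side of that trade-off can be put into rough numbers: the longer the pipeline, the more cycles are thrown away each time it has to be flushed after a mispredicted branch. The toy model below is only a sketch under our own assumptions: the branch fraction, misprediction rate and the 10- and 31-stage depths are illustrative values, not figures from Intel, and it models the cost side only, ignoring the higher clock speeds a longer pipeline can allow.

```python
def cycles_per_instruction(depth: int,
                           branch_fraction: float = 0.2,
                           mispredict_rate: float = 0.05,
                           base_cpi: float = 1.0) -> float:
    """Toy estimate of average cycles per instruction for a given pipeline depth.

    Assumption: each mispredicted branch costs roughly one full pipeline
    refill, i.e. `depth` cycles.
    """
    return base_cpi + branch_fraction * mispredict_rate * depth


# 14 stages is Core's depth from the text; 10 and 31 are illustrative
# stand-ins for a "very short" and a "very long" pipeline.
for depth in (10, 14, 31):
    print(depth, round(cycles_per_instruction(depth), 2))
```

In this model the flush penalty grows linearly with depth, which is why a very long pipeline needs a correspondingly higher clock speed just to break even.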

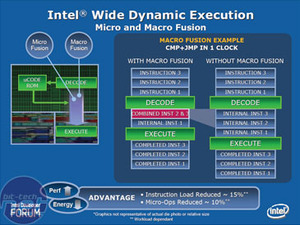

Intel has also optimised its micro-ops fusion technology, which goes through the decoded instructions with a fine-toothed comb, combining micro-ops that come from the same x86 instruction into a single micro-op before passing them on to the pipeline for execution. Along with micro-ops fusion, Core introduces macro-ops fusion too. As you would expect, this focuses on the bigger picture by working on the x86 instructions as they arrive. It combines certain pairs of instructions, such as a compare followed by a conditional jump, into a single micro-op, further reducing processor overhead and making for less work in the pipeline.
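To give a feel for macro-ops fusion, here is a heavily simplified sketch that walks a list of x86 instructions and merges a compare or test with the conditional jump that immediately follows it. The function name `macro_fuse` and the string representation are our own inventions for illustration; the real decoder works on instruction bytes and has more restrictions than this.

```python
FUSIBLE_FIRST = {"cmp", "test"}  # instructions that can lead a fused pair


def is_conditional_jump(instruction: str) -> bool:
    """True for jcc-style mnemonics (jne, jz, ...), but not the unconditional jmp."""
    mnemonic = instruction.split()[0]
    return mnemonic.startswith("j") and mnemonic != "jmp"


def macro_fuse(instructions: list[str]) -> list[str]:
    """Merge each compare/test + conditional jump pair into a single entry."""
    fused = []
    i = 0
    while i < len(instructions):
        current = instructions[i]
        following = instructions[i + 1] if i + 1 < len(instructions) else None
        if (following is not None
                and current.split()[0] in FUSIBLE_FIRST
                and is_conditional_jump(following)):
            fused.append(f"{current} + {following}")  # one op instead of two
            i += 2
        else:
            fused.append(current)
            i += 1
    return fused


program = ["mov eax, 1", "cmp eax, ebx", "jne loop", "add eax, 2"]
print(macro_fuse(program))
```

Four instructions go in and three entries come out, which is the point: the pipeline has one less item to track for every fused pair.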
